Lasita Maja shipping system

For some time now, I have been working on an information system designed to manage shipping orders at AS Lasita Maja.

AS Lasita Maja makes log cabins. They are one of the leading log cabin producers in Europe, producing over 40000 buildings per year. They sell buildings to retail businesses who sell them to end customers. Buildings get shipped out by parts, packaged into different-sized packages. Shipping is done using freight trucks and 3rd party shipping providers.

A log cabin:

In 2016, I helped them to replace the slow and manual process of cargo planning for shipping trucks. Initially, I built a small browser-based app. The app supported a list of packages that you could drag-and-drop onto the working area representing the truck. At the end, you would print out the sheet for the forklift operator. The list of packages was stored inside the browser storage, and you did not have to enter them again. This was done using paper and pencil before the app. Now we made it at least 10 times faster.

Parts of log cabins packaged and being loaded onto a shipping truck:

As we kept adding features to the cargo plan app, we also started to look at automating the other parts of the shipping workflow. Most tracking and data processing was done using spreadsheets, shared by e-mail between the company employees. The company had been looking at some existing automated solutions but found nothing that they really liked and did everything they needed. This is when we decided to turn the cargo app into a more significant enterprise system.

Scope of the system

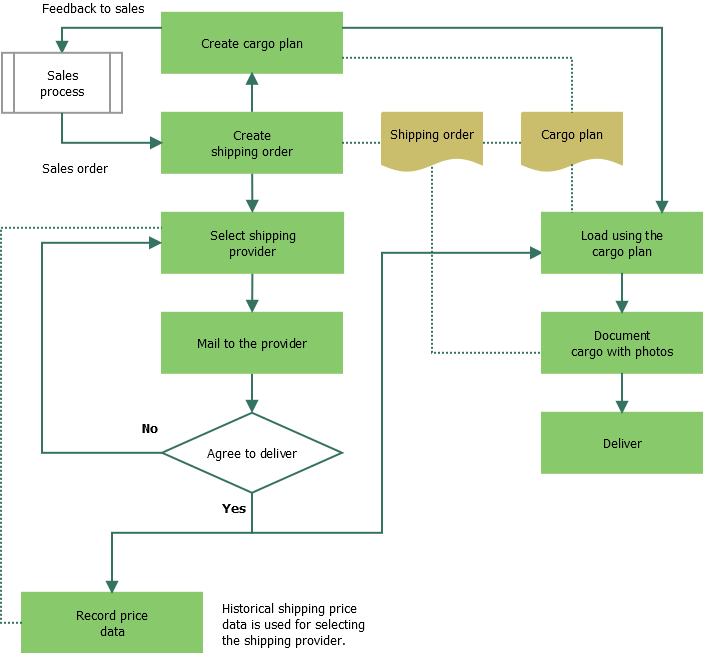

The scope of the new application was to handle the entire shipping process. The shipping process starts from sales and ends with a delivery.

This is the main process handled by the new system:

The application data model is designed around the shipping order and cargo plan entities but also contains:

- Shipping providers;

- Destination addresses;

- User data (company employees).

In the web-based user interface, there are tables representing these entities. The data is entered and modified through inline popover forms. It works similar to spreadsheets, except that the application speeds up data entry, guarantees data consistency, and enables easy sharing throughout the company.

The system was built as a series of development iterations. Each iteration began once a sizable amount of business requirements had been gathered. In each iteration, we also budgeted for unforeseeable changes, allowing us to shift focus onto more important changes than the planned. Each iteration lasted from 1 to 3 months.

I have used this sort of iterative project management on many projects. It gives a nice transparent overview of features, cost, and timeline for the client.

We worked as a team of 4 people: 2 logisticians, the shipping department manager, and me. I was the only technical person in the team.

The main concepts behind the system were envisioned by my friend Jana who works as a logistician for the company. She has a great talent to recognize opportunities for automation to make work more efficient. The idea for the original cargo plan app also came from her.

Tools that we used in the project management:

- Redmine project management software;

- Facebook Messenger for communication;

- Git version control system.

Architecture

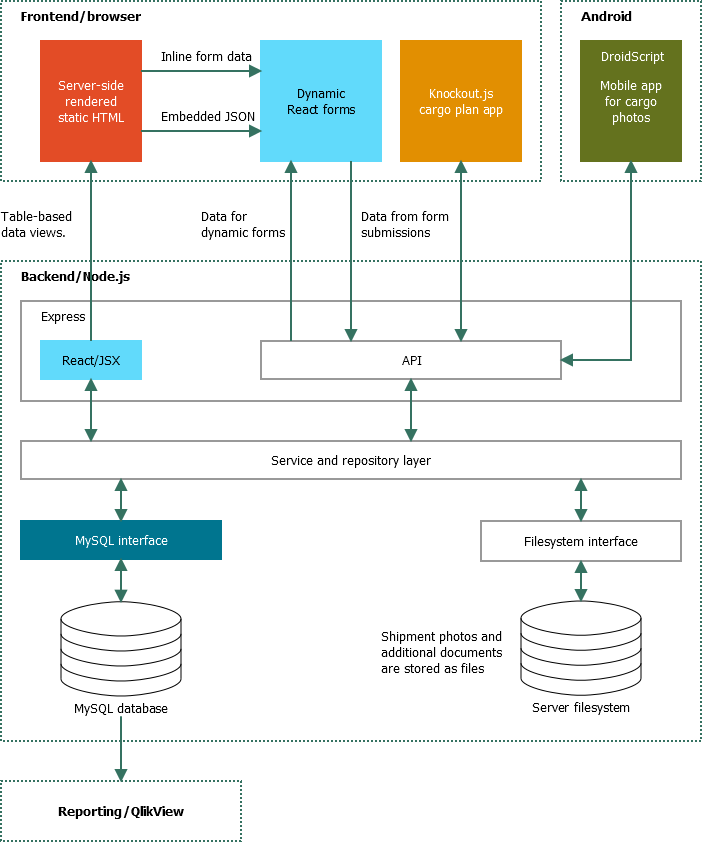

The system consists of the following parts:

- Backend (database, API endpoints, HTML for tabular views);

- Frontend (forms, tables, cargo plan app);

- Android application (cargo photos).

The application is built on the Node.js platform and uses the Express and MySQL. I have built a numerous other applications with the same setup. The server-side templating uses JSX through a small wrapper around React. Server-side templating is used for the main site layout and views that mostly contain tabular data.

The frontend is built using Bootstrap and React. React is used for forms. Some of the forms are very dynamic, containing unbounded lists of inputs. The frontend is connected to the backend through an HTTP REST API.

The cargo plan app was written in Knockout.js before we made the plans to cover the whole shipping workflow. It required only a small change to make it load and store data on the backend through the new API.

The system includes an Android application to take photos of cargo trucks before the shipment is covered by tent. The photos include package numbers and are used for verifying the shipment when necessary. The Android application uses DroidScript.

Architecture diagram:

Backend

The backend uses the Express web framework with some helpful packages. The backend code targets Node.js 8.x and makes great use of the JavaScript language async/await support.

Other packages used in the backend:

- React for generating static HTML;

- Nodemailer for sending e-mails;

- EJS for text-based e-mail templates;

- Moment for formatting dates;

- The xlsx package to import and export spreadsheets;

- The MySQL package to connect to the MySQL database;

- The sharp package to process uploaded photos;

- PDFKit to produce PDF documents.

Tools:

-

Visual Studio Code text editor;

- eslint for code linting;

- nodemon for reloading the server in development;

- MySQL Workbench.

The backend uses React JSX as the templating language. Although React is designed as a front-end view library, it includes a full support for server-side rendering. It provides much more powerful abstractions than string-based templating languages, such as EJS. The React-based views are loaded through the babel-register package that compiles JSX to standard JavaScript.

E-mails are sent using the Nodemailer package. E-mails are text-based and employ EJS as the templating language. Mails are sent over the SMTP protocol.

Some tables support data export into an Excel spreadsheet. This is done through SheetJS. It is also utilized to bulk-import package dimensions for the cargo plan application.

Cargo photos from the Android app are processed via the sharp package. It generates thumbnails from the images.

PDF documents are generated with the PDFKit package. There are 2 types of PDF documents: shipping orders for the shipping provider; cargo plans for the forklift operator. Coding the PDF export for the latter was actually one of the major technical challenges of the project as PDFKit lacks a layout engine. It required a custom layout optimizer to optimize for the maximum output text size without eliding crucial information from the text. The optimizations are required to counter the poor lighting and hard-working conditions in a forklift.

Database

Database access is done using the MySQL package. I wrote a small async/await-based transactional layer around it. SQL queries are kept in external .sql files, loaded into memory during the application startup. I have used this approach in many web applications. It provides full-text editor support for SQL. In my opinion, it is a better approach than a full-featured ORM or query generators, at least in applications that do more than basic CRUD queries. Some of the application queries include analytic parts that span over 20 lines and exploit derived tables. This use case is nearly impossible to maintain with Sequelize or Knex.

Example of a transaction script (service layer):

exports.save = async (order) => {

return mysql.transaction(async (connection) => {

await updateAddresses(connection, order.unload, order.name);

await updateAddresses(connection, order.onload, order.name);

const id = await ordersRepo.save(connection, order);

if (order.planId > 0) {

await ordersRepo.associate(connection, id, order.planId);

}

return id;

});

};

And the repository layer:

const saveQuery = new Query(path.join(__dirname, "sql", "save.sql"));

// ...

exports.save = async (connection, order) => {

const results = await saveQuery.run(connection, order);

return results.insertId;

};

The same connection object passed down to the repository layer guarantees that the whole operation is atomic.

Database migrations are applied as SQL scripts. Each script filename contains a numerical identifier and the description of the operation. The

script identifiers are recorded in the migrations table to avoid running them again. The migrations table has a single integer column

for the script identifier. Before each update, a database snapshot is taken with mysqldump. This guarantees that the database can be

quickly restored after a failing migration.

The MySQL instance was directly connected to Qlik View. Qlik View is a business analytics platform, providing insight into the whole company dataset gathered from various data sources. Besides the shipping system, the data comes from SAP (sales, accounting), and potentially from other sources.

API

The backend API uses built-in routing from Express with a small wrapper to support async functions. It uses the JSend convention to format responses. JSend has a small and simple specification.

Example handler for the API:

app.put(

"/api/order/update/:id",

json(),

api(async (req, res) => {

const id = parseInt(req.params.id, 10);

const data = req.body;

const errors = validate(data);

if (errors.hasError()) {

throw new ValidationError(errors);

}

data.author_id = req.user.id;

return ordersService.update(id, data);

})

);

The whole backend code is structured using the principles from here.

Frontend

The frontend uses Bootstrap and React. The target browser was selected as recent Chrome, but the system was tested with Firefox as well. No support for IE11 was necessary.

Other libraries used in the frontend:

- Flatpickr for date/time editors;

- Knockout.js for the cargo editor;

- Photoswipe to browse the uploaded cargo photos;

Tools:

-

Visual Studio Code text editor;

- eslint for code linting;

- Webpack to build frontend bundles;

- Google Chrome devtools for debugging;

- Selenium Webdriver and Mocha for testing.

User interface

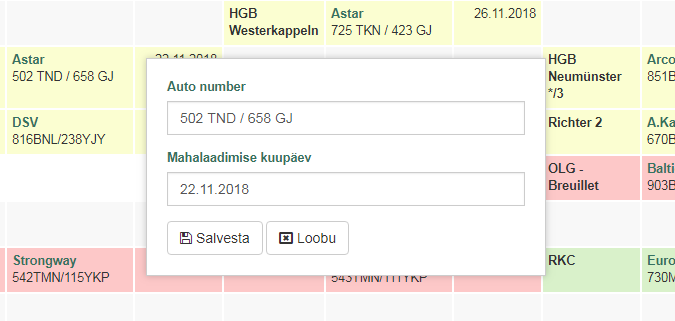

Most of the frontend views were displaying tabular data and generated as HTML by server-side templating. Forms were built using React and compiled into separate bundles. The bundles contain common code for text inputs and generic form handling. React and other libraries are loaded as separate script files. This makes the bundles small (10-40kB) and quick to load.

Almost all the forms are built as inline popover forms. They are designed to quickly edit data in tables. The code to display and hide popovers is integrated into the common form handling code, making individual form components terse and simple.

A popover form inside a table:

The common form handling code employs a higher-order component that manages the form state and lifecycle. The lifecycle contains:

- Loading of initial form data;

- Association with metadata (like the associated table row);

- Form data updates through input elements;

- Client-side validation;

- Form submission;

- Server-side validation;

- Error message display;

- Navigation after successful submission.

Form data is loaded from 3 sources:

- Through REST API from the backend;

- From JSON embedded in the server-side rendered HTML;

- From

data-attributes for inline forms in the table.

Validation is done through 2 layers:

- Shared validation;

- Server-side validation using database.

Shared validation uses the same codebase for both client-side and server-side validation. It checks the constraints that do not require database access. This includes:

- Empty fields;

- Data format: text, integer, phone number, e-mail address, etc.

Server-side validation checks for uniqueness constraints, existence of the associated entities, etc. It is done in the service layer on the backend. The potential validation error messages are contained inside a response object where property names relate the messages to the form fields.

Cargo plan app

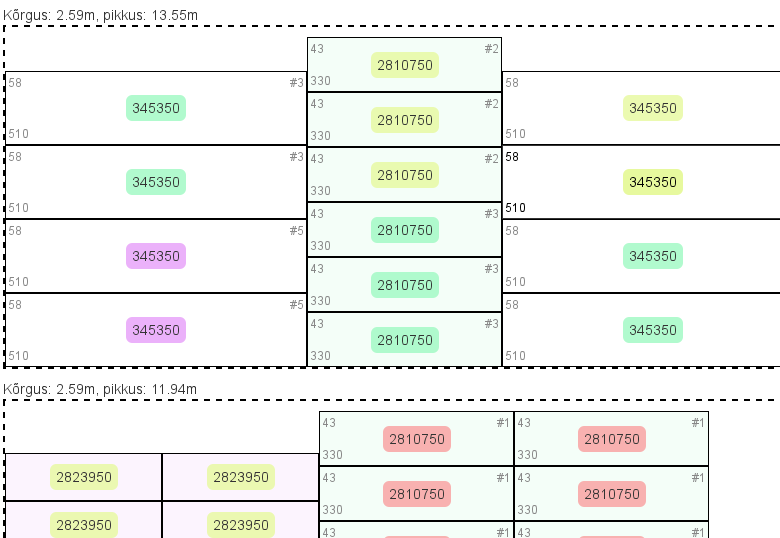

The cargo plan application was developed before the rest of the system. It is built on the Knockout.js library. Knockout is very performant for interactive DOM scripting and its implicit, almost magical, automatic observables lead to compact code. Knockout works well for complex user interfaces, but it provides no maintainable structure for large applications.

The application represents the truck as two rectangular work areas. The packages on the real truck are loaded from left and right side. In nearly all the cases, only two packages lay side-by-side at the given location. This leads to a simple 2-dimensional representation where only package length and height has to be considered.

Work area in the cargo plan app:

The packages can be freely dragged around, they snap into place. The packages are color coded (as are many tables in the application) to make usage easier and more efficient.

Testing

The frontend uses a set of end-to-end tests. They cover the main workflow, from authentication to reports. The tests are implemented using Webdriver and Mocha.

Cargo photos app

A mobile application is used for taking photos of the loaded cargo before it is sent out. It is part of the inspection process to verify that the packages were loaded correctly.

The application is integrated with the backend through the API. It loads the list of shipment orders which is presented after the app is started. A shipment is selected from the list and the photos are taken. The photos are automatically uploaded to the backend.

The application runs on Android. It is built using the DroidScript development platform. DroidScript is a JavaScript-based Android development platform that contains bindings of the native Android user interface objects. It is considerably simpler to use than React Native or the native Java-based SDK.

DroidScript comes with its own text editor. However, it is very limited. It does not even support multiple code files per project. It was easy to get started, but it became a bit inconvenient when the codebase grew. Fortunately, the application was small enough to tolerate this until it was done.

Storing data on Android was one of the technical challenges. The Wi-Fi connection outside, when taking photos, was not reliable, which meant that the application had to work offline for some period of time. Offline usage is one of the pain points in mobile development. Luckily, the dataset was small and relatively simple for storage synchronization.

Deployment

The application backend was deployed to a physical server. The server was delivered by Ordi. It is based on Supermicro hardware.

Tools used in deployment:

- Debian operating system;

- Tar and SSH for updates;

- Samba/rsync for backups;

- UptimeRobot for downtime alerts;

- Sentry.io frontend error monitoring.

The server runs on the Debian operating system. It is a standard installation. Supermicro is a very popular server hardware producer, and everything worked out of the box.

SSH is used for remote access to install application updates. These are delivered as a tar archive over the SCP protocol. Everything is automated by a small Node.js script. Updates were applied right after implementing a feature.

Backups are implemented by running rsync daily to copy files to a Samba-based storage on the local network.

The server is monitored with UptimeRobot and Sentry.io. UptimeRobot checks periodically that the system is not down. In the case it is, an alert is sent to my mail. Sentry.io catches and logs all frontend errors.

A video introduction of the system can be found on YouTube.

Summary

I enjoyed working on this project, and that's why I decided to do a longer technical write-up about it. We had a clear vision of the system goals at the beginning, and a focused team that knew what they were doing. The scope of the project was just right.

The choice of technology was great. There was a minor inconvenience to produce PDF exports, as the PDFKit has no layout engine. The second annoyance was DroidScript. That platform looks simple at first but lacks support for developing large applications.

It is not my most complex project, or the biggest, or the most critical one. It nowhere near my most profitable one. However, in the end, as a whole, it came together really well.